Sim-Diasca Dataflow HOWTO

| Organisation: | Copyright (C) 2016-2025 EDF R&D |

|---|---|

| Contact: | olivier (dot) boudeville (at) edf (dot) fr |

| Authors: | Olivier Boudeville, Samuel Thiriot |

| Creation Date: | Thursday, February 25, 2016 |

| Lastly updated: | Friday, July 18, 2025 |

| Version: | 2.4.8 |

| Status: | Stable |

| Website: | http://sim-diasca.com |

| Dedication: | For the implementers for Sim-Diasca dataflow-based models. |

| Abstract: | This document describes how dataflows, i.e. data-driven graphs of blocks, are to be defined and evaluated in the Sim-Diasca simulations relying on them. |

Table of Contents

- Foreword

- Usual Organization of the Model Evaluation

- An Alternate Mode of Operation: the Dataflow

- On Dataflows & Experiments

- On Dataflow Processing Units

- On Dataflow Ports, Channels and Buses

- On Dataflow Values

- Logic of the Dataflow Block Activation

- On Dataflow Objects

- On Model Assemblies

- A More Complete Example

- Developing Dataflow Elements in Other Programming Languages

- More Advanced Dataflow Uses

- Experiments

- A General View on the Dataflow Synchronisation

- The World Manager and its Dataflow Object Managers

- The Experiment Manager and its Unit Managers

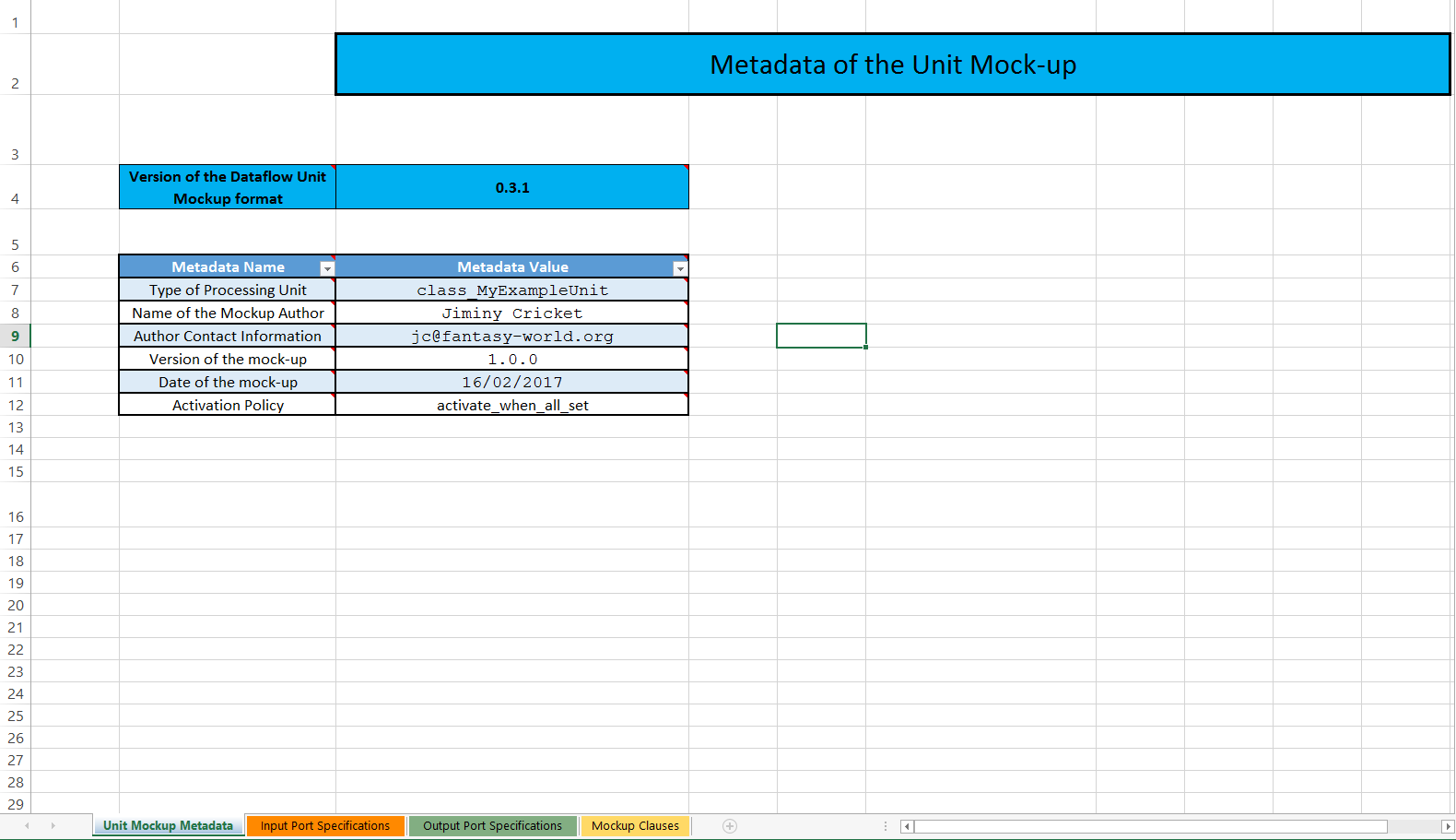

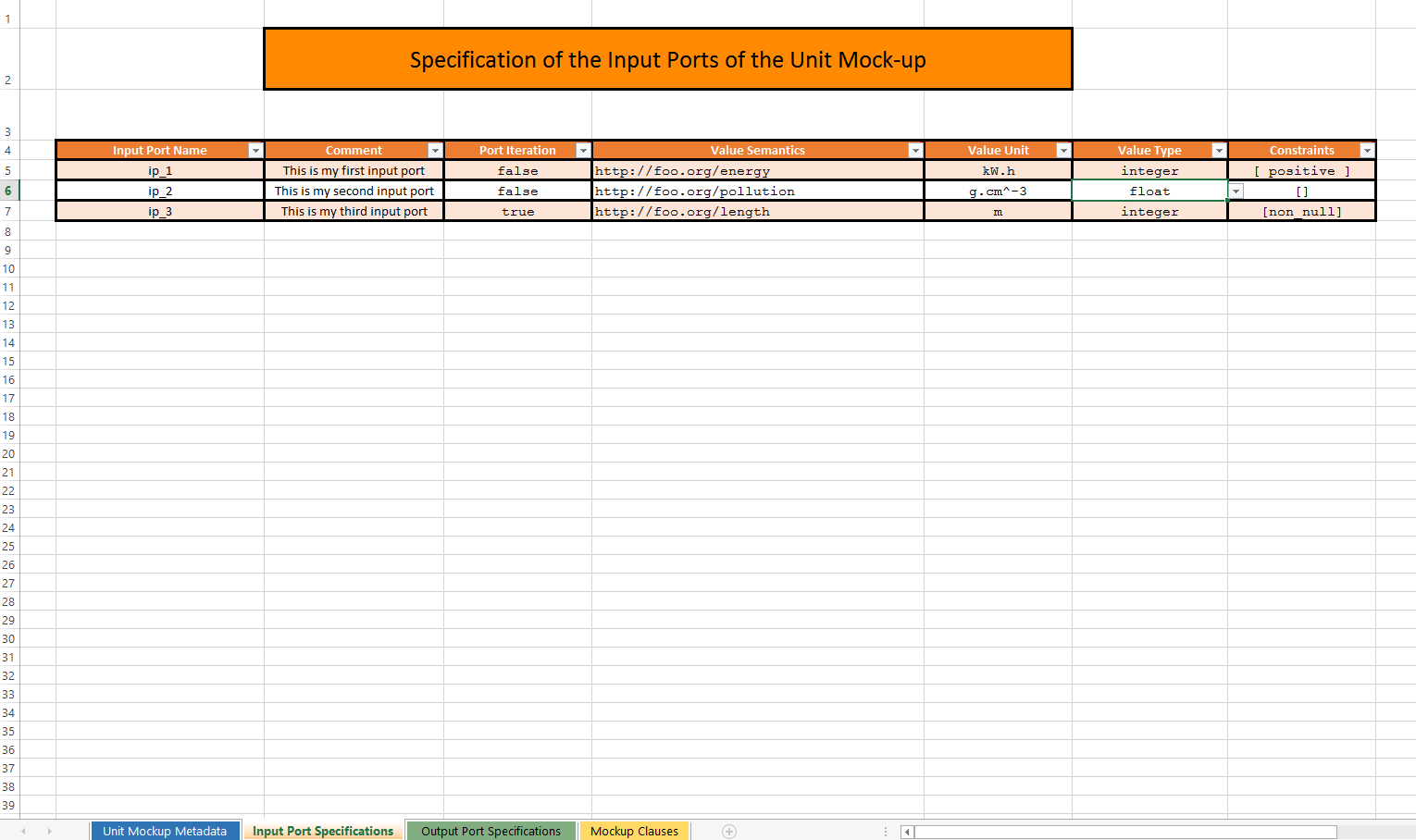

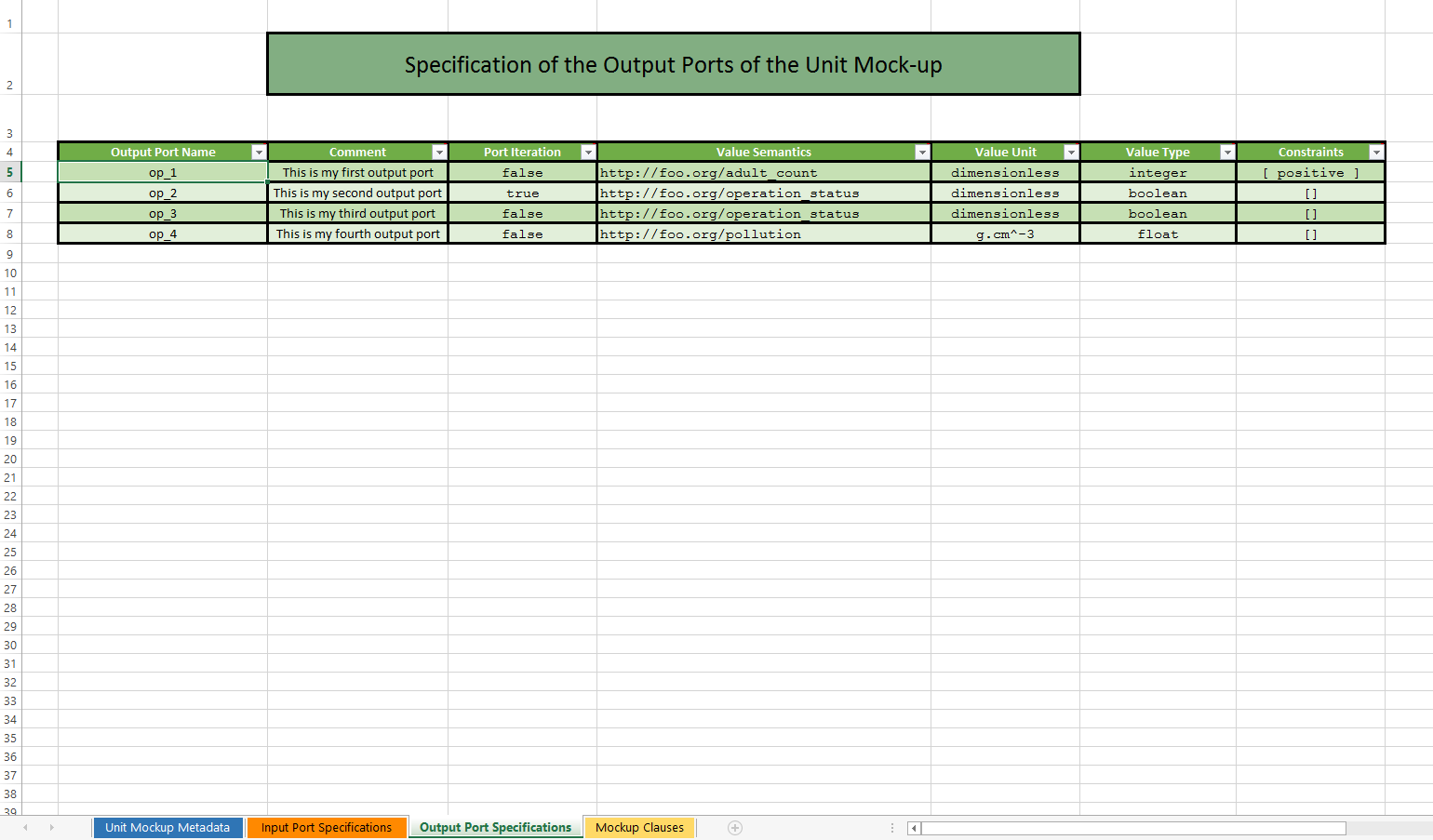

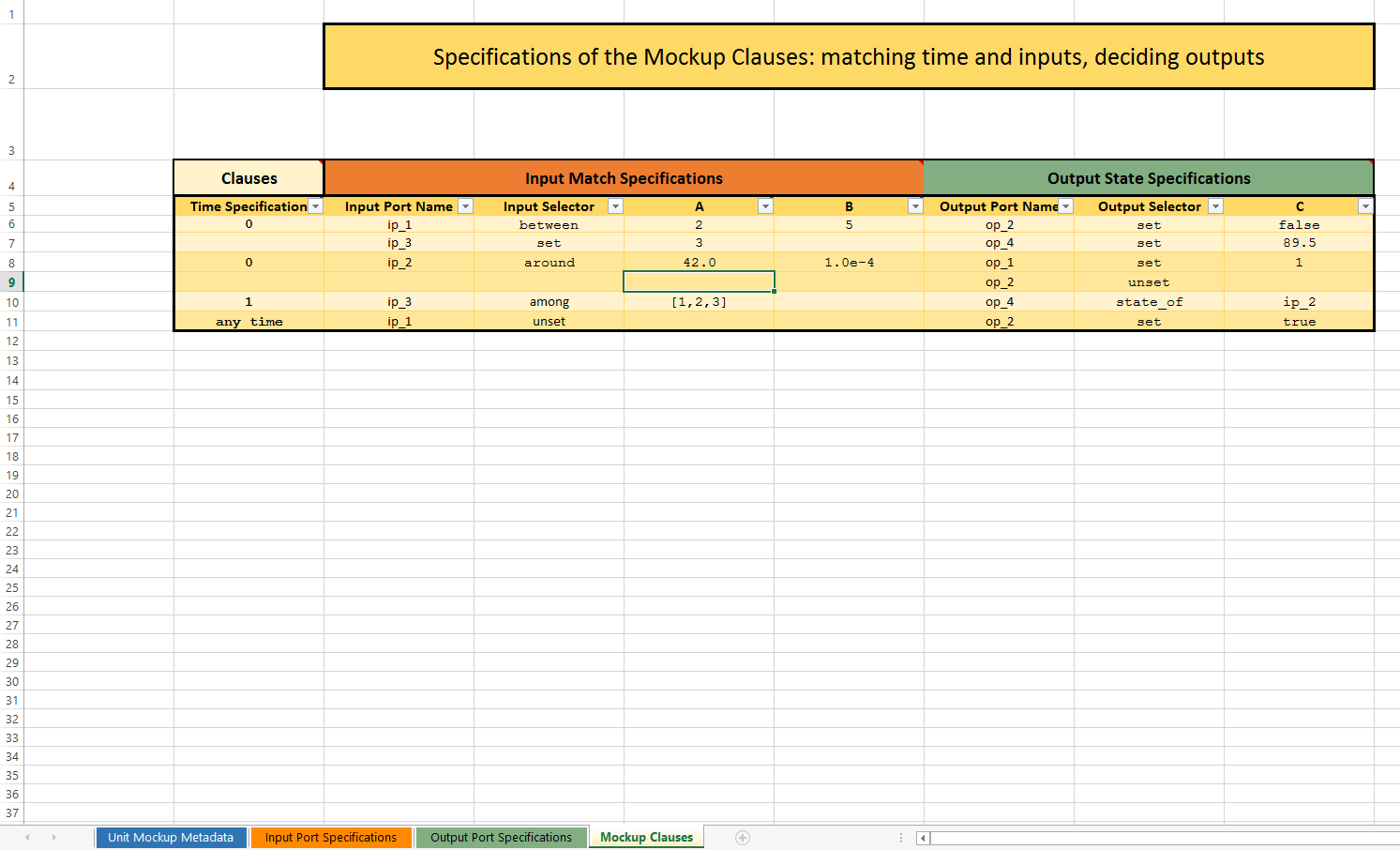

- About Mock-up Units

- Integrating a Model As a Dataflow: a Short Walkthrough

- Preliminary Step (#0): Remembering the Basics

- Step #1: Ensure that the Overall Simulated World Can be Structured As a Dataflow

- Step #2: Determine the Specific Relationships Between the Dataflow and this Model

- Step #3: Break the Black Box Into Actual Dataflow Units

- Step #4: Implement the Corresponding Actual Units

- Step #5: Add the Corresponding Unit Manager(s)

- Requirements For the Dataflow Integration of a Model

- Implementation Section

- Appendices

Foreword

The simulation of complex systems often relies on loosely-coupled agents exchanging signals based on a dynamic, potentially complex applicative protocol over a very flexible scheduling.

However, in some cases, the modelling activity results alternatively in the computations being at least partly described as a static network of interconnected tasks that can send values to each other over channels that applies to a simulated world - i.e. a dataflow.

Both approaches will be detailed and contrasted below, before focusing on how dataflows can be defined and used with Sim-Diasca.

Note

Most of the dataflow-related concepts mentioned in this document are illustrated on a complete, runnable simulation case: the Dataflow Urban Example, whose sources are located in the mock-simulators/dataflow-urban-example directory of the standard Sim-Diasca distribution.

Besides these case-specific elements, the sources of the generic dataflow infrastructure are also available, in the sim-diasca/src/core/src/dataflow directory.

Please feel free to skim in these respective sources for a better practical understanding of the dataflow infrastructure.

Usual Organization of the Model Evaluation

In most simulations of complex systems, the simulated world is sufficiently disaggregated into numerous autonomous model instances (be they named agents or actors) so that the evaluation of their respective behaviours and interactions naturally leads to processing the simulation. In this context, trying to constrain or even hard-code static sequences of events is often neither possible nor desirable.

For example, one can see a city as a set of buildings, roads, people, etc., each with its own state and behaviour, the overall city (including its districts, precincts, etc.) being the byproduct of their varied interactions - a possibly hierarchical, certainly emergent organisation.

This approach is probably the most commonly used when modelling a complex system, hence it is the one natively supported by Sim-Diasca: the target system is meant to be described as a (potentially large) collection of model instances (a.k.a. actors) possibly affected by scenarios and, provided that their respective state and behaviour have been adequately modelled, the engine is able to evaluate them in the course of the simulation, concurrently, while actors feed the probes that are needed in order to generate the intended results.

The (engine-synchronised) interactions between actors are at the very core of these simulations, which are determined by how actors get to know each other, exchange information, opt for a course of action, create or destroy others and, more generally, interact through an implicit overall applicative protocol resulting from the superposition of their individual, respective behaviours.

However other, quite different, organisational schemes can be devised, including the one discussed in this section, the dataflow paradigm.

An Alternate Mode of Operation: the Dataflow

Let's define first what is a dataflow.

Note

A dataflow is a way of describing a set of interdependent processings whose evaluation is driven by the availability of the data they are to handle.

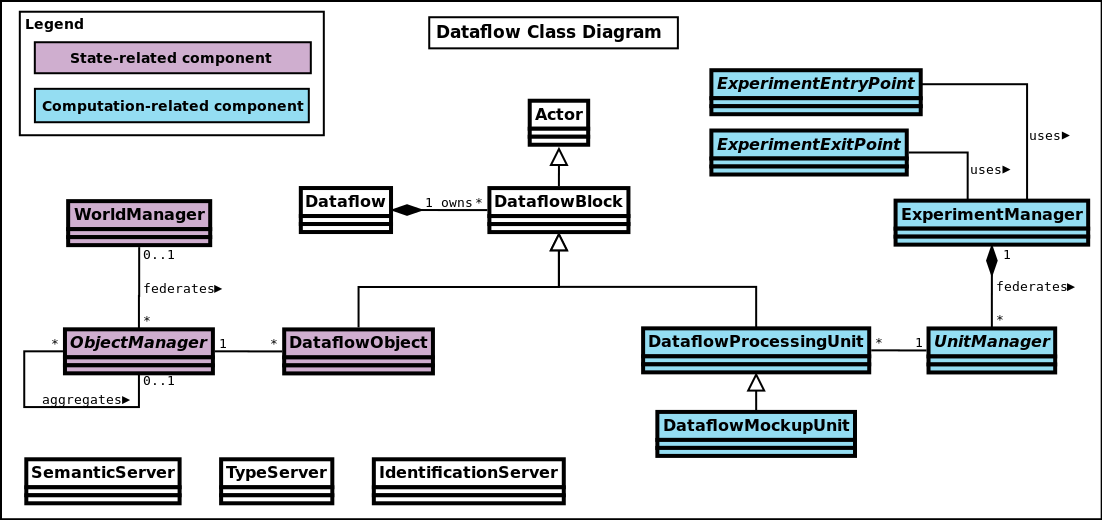

In this more constrained organisation, rather than having actors freely exchanging various symbols and messages according to dynamically-decided patterns, we rely here on quite specialised actors that embody dataflow blocks, which are:

- either dataflow processing units (instances of the DataflowProcessingUnit class), which set and listen for values, through channels that are delimited each by an input port and an output port, and perform associated computations

- or dataflow objects (instances of the DataflowObject class) that stores attributes that can be set and read respectively thanks to their associated input and output ports

All these dataflow blocks and the channels linking them form altogether a graph (whose nodes are the blocks, and whose edges are the channels). This graph is by default:

- statically defined: its structure can be established before the simulation starts

- static: in the general case, its structure is not expected to change in the course of the simulation

- directed: channels are unidirectional, only from an output port of a block to an input port of a block

- acyclic: by following the declared (directed) channels, no path should go through the same block more than once

The graph can be explicit or not: either it is described as a whole (as a single, standalone entity), or it can be merely extrapolated from the union of the channels drawn between the declared blocks.

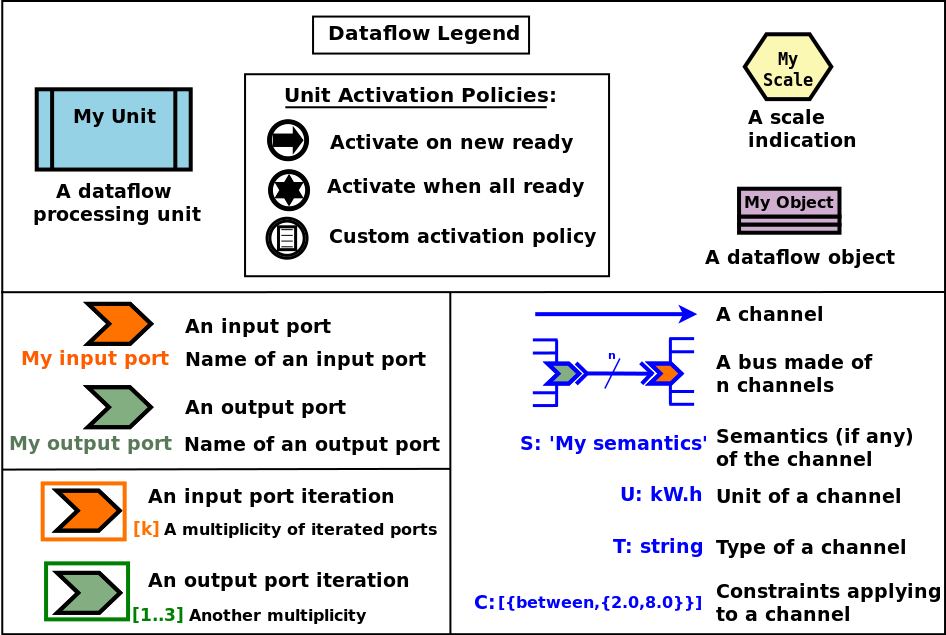

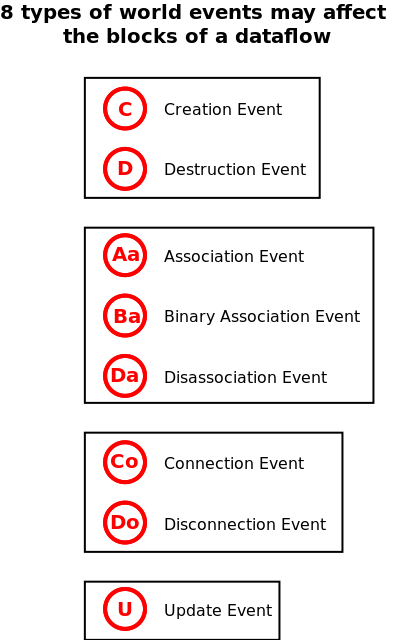

Dataflows of course have an immediate graphical counterpart. The conventional symbols we elected are represented in this key:

By convention, input ports are in orange, output ports in green, dataflow objects in light purple, dataflow units (e.g. processing or mock-up ones) are in light blue and comprise the symbol of their activation policy, and channels are in various shades of blue [1].

| [1] | Please refer to Annex 3: Conventions for the Graphical Representation of Dataflows for more information. |

Still in blue, the SUTC quadruplet:

- the channel Semantics (i.e. the meaning of the conveyed values) can be specified, as an arbitrary domain-specific symbol prefixed with "S:" (like in "S: 'produced heat'"); project conventions may apply, notably in order to adopt the RDF format, like in:

S:'http://foobar.org/urban/1.1/energy/demand'

- the Unit of the value, prefixed with "U:" (e.g. "U: kW.h", or "U: g/Gmol.s^-2"); often the unit information implies a type (described in next point): for example the unit "U: W" implies the type "T: float"; in this case the type information can be safely omitted

- the Type of the values conveyed by the channel, prefixed with "T:" (e.g. "T: string" or "T: {integer,boolean}")

- the Constraints (if any) applying to the exchanged value, as a list of elementary constraints (e.g. "C: [ {between,{2.0,8.0}} ]" means that a single constraint applies to the exchanged values, which is that they must be between 2 and 8)

These SUTC information shall preferably be specified close to the associated channel (if any) or output port.

Unit activation, semantics, units, types and constraints are discussed more in-depth later in this document.

Specifying the names of dataflow units and ports is mandatory.

As a processing unit is in charge of performing a specific task included in a more general computation graph (the dataflow), its name shall reflect that; one may consider that the name of such a unit is implicitly prefixed with a verb like compute_. For example, a processing unit named fuel_intake could be understood as compute_fuel_intake (and we expect it to have at least one output port dealing with fuel intake).

Finally, as some dataflow units have for purpose to aggregate metrics across time and/or space, some scale indication may be given for documentation purposes, enclosed in an hexagon in pale yellow.

The dataflow objects are specifically discussed in a section of their own later in this document.

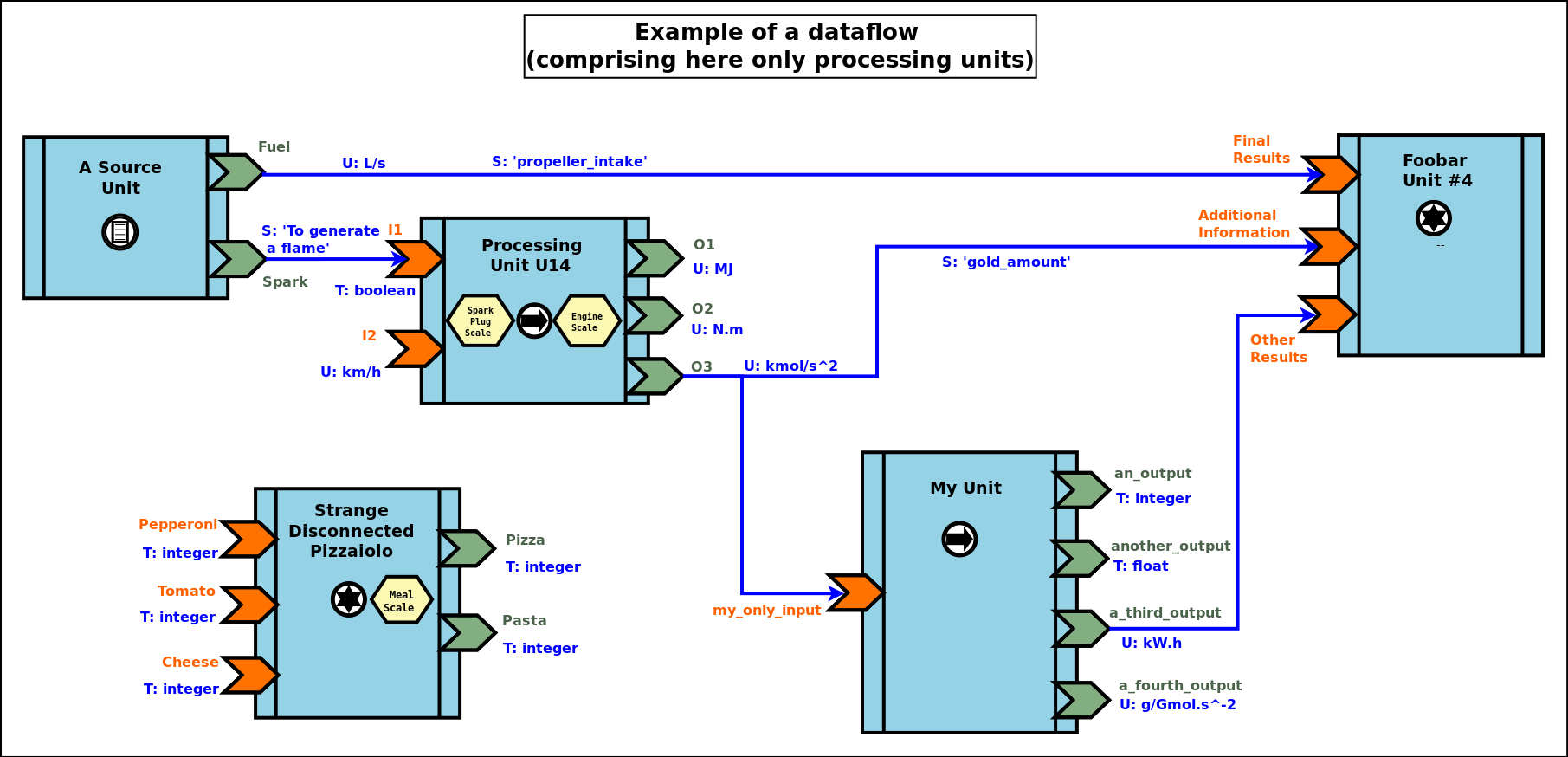

As a result, a dataflow, which shall be interpreted as a graph of computations, may look as this (meaningless) example:

We can see that a dataflow does not need to be fully connected (the blocks may form disjoint subgraphs) and that ports (input and output ones alike) may not be connected either.

The global progress of the computations happens here from left to right.

A more complete example is given later in this document.

Now let's detail a bit all the elements involved.

On Dataflows & Experiments

Dataflow Definition

As mentioned, a dataflow is a graph of computations whose evaluation is driven by the availability of the data they are to handle.

In practice, it is a set of interlinked dataflow blocks, typically dataflow processing units and dataflow objects.

Even though dataflows could remain only implicit data-structures (they would just correspond to an actual set of interlinked dataflow elements), we preferred introducing an actual dataflow class, in order to ease the interaction with such instances and provide a reference point.

So overall operations on a given dataflow (e.g. creations, modifications, report inquiries) shall be operated only through its corresponding federating class_Dataflow instance.

Multiple dataflow instances may exist, and they are collectively managed by the overall experiment manager, introduced later in this document.

Experiment Definition

An experiment corresponds to the overall evaluation task that is to be performed by a (here: dataflow-based) simulation, as it is described by the corresponding simulation case.

For that such an experiment aggregates any number of dataflows, which progress in parallel, typically through a series of steps [2].

During each step, each dataflow instance, based on any update of its input ports, is fully evaluated (i.e. until it reaches a fully stable state).

| [2] | In engine-related terms, an experiment step of the dataflow infrastructure corresponds to a simulation tick of the engine. During such a step, dataflows are evaluated over diascas, resulting on their elements exchanging values, until none of the output port is set anymore. Then the next step (tick) can be evaluated, etc. |

More in-depth information can be found in the Experiments section.

On Dataflow Processing Units

A (processing) unit is, with dataflow objects, the most common type of dataflow block.

A dataflow unit encapsulates a kind of computation. For example, if an energy demand has to be computed in a dataflow, an EnergyDemandUnit processing unit can be defined.

Such a unit is a type, in the sense that it is an abstract blueprint that shall be instantiated in order to rely on actual units to perform the expected computations. Therefore, in our example, a class_EnergyDemandUnit processing unit shall be defined (specified and implemented), so that we can obtain various unit instances out of it in order to populate our dataflow.

As discussed in the next section, each of the instances of a given dataflow unit defines input and output ports.

Note

It shall be noted that, additionally, each processing unit instance benefits from a state of its own (that it may or may not use): the attributes that the unit class introduced are available in order to implement any memory needed by the unit, and of course these attributes will retain their values through the whole lifetime of that instance (hence through simulation ticks and diascas).

So a unit may encapsulate any processing between a pure, stateless function to a more autonomous, stateful, agent.

On Dataflow Ports, Channels and Buses

A port is the only way by which a dataflow block (typically a unit) may interact (propagate a value) with other blocks.

Following rules apply:

- a port is either an input one (listening to the update of a value conveyed by the corresponding channel) or an output one (able to update its corresponding value and notify its registered input listeners); this is reflected by their type (either input_port or output_port)

- each port is named (as a non-empty string [3], e.g. "my foobar port") and no two input ports of a block can bear the same name, nor output ones can (however an input port and an output port of the same block can have the same name - they will be differentiated by their nature)

- a port identifier is defined from a pair made of an identifier of the block that defined it and from the name of that port [4] (e.g. it could be ("My Unit","Port 24"), or based on more technical identifiers)

- a port (input or output) may either hold a value (arbitrary data can be set; the port is then considered as ready, i.e. as set), or not - in which case it holds the unset symbol (the port is then itself considered as unset)

- an output port can be considered as being always unset: as soon as a new value is available, it notifies all its connected input ports and then reverts back to the unset status; therefore the set/unset status can be abstracted out for output ports, which just get punctually activated

- conversely, this status matters for input ports: a block starts with all its input ports to unset, and, each time an input port is notified by an output port, this input port switches to set; how a block is to react depending on none, one, some or all of its input ports being set is discussed below

- an output port will send downstream the value it holds whenever:

- it gets set: exactly one sending will be performed per setting (regardless of the value that is set) to each of the input ports it is linked to; as a result, setting explicitly a port to a value that happens to be the same as the one that it was already holding will nevertheless trigger a sending (therefore "not setting a value" vs "setting the current value again" are operations that differ semantically)

- it gets connected (i.e. a channel is created from this output port to an input port) whereas this output port has already been set at least once in the past; then, on channel creation, the latest value it sent will be re-emitted, only to the newly connected input port

- ports can convey arbitrary data (i.e. any Erlang term), yet any given port has a type, which defines what are the licit the values that it can hold (e.g. "this port can be set to any pair of non-negative floats") [5]

- a block can declare any number of output ports (possibly none, in which case it is an exit block, a sink)

- a block can declare any number of input ports (possibly none, in which case it is an entry block, a source)

- a channel links exactly one output port to one input port, and these two ports shall have the same types, units and semantics (which are the ones of the channel)

- any number of channels may originate from an output port (possibly none); when an output port is being set (i.e. when it performs a punctual transition from unset to set), then all the input ports listening to it are notified of that [6]

- an input port may be the target of up to one channel; if no channel feeds a port, then it remains in the unset state

- a port records the timestamp (in simulation time) of the last notification (possibly none) it either sent (for output ports) or received (for input ones)

- a bus corresponds to a set of channels ; it shall be seen, at least currently, only as a graphical convention introduced in order to avoid that too many parallel channels are drawn, which would obfuscate the representation of a dataflow (note that no bus per se is considered when evaluating the dataflow; the runtime is only aware of ports being connected to others, so buses - and even channels - are abstracted out)

| [3] | The only restriction is that the "_iterated_" substring cannot exist in a user-defined port name (so for example "foo_iterated_bar_42" will be rejected by the dataflow infrastructure). |

| [4] | A port identifier is typed as -type port_id() :: {dataflow_object_pid(),port_name()}. where dataflow_object_pid() is a PID (the one of the block) and port_name() is a binary string. |

| [5] | The dataflow system may or may not check that typing information. |

| [6] | Indeed the onInputPortSet/3 actor oneway of their respective block is executed, specifying the port identifier of the triggered input port and the corresponding timestamped value (specifying the tick and diasca of the notification). Generally this information is not of interest for the block implementer, as defining for example a unit activation policy allows to handle automatically input ports being triggered. |

Even if conceptually it is sufficient that only the output port knows the input ports it may notify (and not the other way round), technically the input ports also know the (single, if any) output port that may notify them, for example for a simpler support of unsubscribing schemes.

On Dataflow Values

We saw that a value designates a piece of data carried by a channel, from an output port to any number of input ports.

Various information are associated to the output ports and to the values they carry (they are metadata), notably the SUTC quadruplet (for Semantics-Units-Type-Constraints), which the next sections detail in turn, yet in a different order for the sake of clarity - roughly from the most concrete to the highest-level.

Type of a Value

Type Basics

A channel is typed, in the sense that all the values that it conveys shall be of the same type (in terms of programming language; e.g. a floating-point value), which is the one specified for the channel. The T in SUTC stands for this type information.

The name of a type must be a series of alphanumerical characters, in lowercase, starting with an alphabetical one, and possibly containing underscore (_) characters; example of a type name: integer or this_is_my_type_name_221.

The complete type specification in a dataflow (typically used to describe a port) shall be prefixed with "T:" (for example "T: integer" would mean that the corresponding port handles values of type integer).

In the absence of unit information (see next section), the type information is mandatory and must be specified by both port endpoints. It may or may not be checked, at build and/or run time.

Note

The specified typing information is currently not used: as a consequence, the values conveyed by the dataflow are not yet checked against their declared type.

A set of built-in and derived types is provided, and many port specifications rely directly on them in order to define simple, scalar, atomic values (often floating-point ones) - as opposed to compound ones. Specifications may also define and use any additional type that they may need.

Moreover, if deemed useful, more complex data structures may be specified, based on polymorphic types like tuples (denoted as {}), on lists (denoted as []) or even, in the future, on associative tables.

All these constructs enable the specification of all the typing information needed by the user in order to describe, in computing terms, the values exchanged over the dataflow.

Let's discuss the basic typing primitives first, i.e. the built-in types.

Built-in Types

Following built-in types are available (they map to Erlang native types; some related technical details put between parentheses or in footnotes may be safely ignored by the reader):

| Name of the built-in type | Description | Example value |

|---|---|---|

| integer | Any (unbounded; positive or negative) integer value. | -112 |

| float | Any floating-point value (in double precision). | 3.14159 |

| number | Any number (either an integer or a float) [7]. | 1.1 |

| string | Any series of characters delimited by double quotes [8]. | "Hello world!" |

| boolean | Either 'true' or 'false'. | 'true' |

| count | A non-negative integer, to mention a number of elements (possibly zero). | 17 |

| percent | A floating-point percentage (alias of float), the value 1.0 corresponding to 100%. | -1.4 |

| json_content | An opaque, stringified JSON representation of a value. | (undisclosed, as meant to be opaque) |

| any | Wildcard type, corresponding to any type (no information given, hence no type checking can be performed in this case). | "I am a value." |

| [7] | Note that some types overlap, notably some of them are special cases of others, like integers and numbers. So, for a given value, multiple type specifications apply (e.g. any will always match). |

| [8] | Mapped as an Erlang binary string, displayed as a basic string. |

Note

Indiscriminate use of the any type is strictly prohibited; typically it should never be used operationally (e.g. when defining actual ports), as it would then be a way of bypassing the type system.

For advanced users: the main use of any lies in the very specific context of "polymorphic units", i.e. units that may be able to process values of all kinds of types.

Derived Types

These types are not built-in, they are to be specifically derived (possibly by the user directly) from other types (which themselves may be built-in or derived).

Type Definition

Often, a new type definition has to be used in several occurrences (e.g. when having different ports that happen to rely on the same type). Rather than repeating the same type definition each time, we recommend that, as soon as a type definition is used more than once for the same purpose, a (derived) type is defined from it and named one time for all - and then referred to as often as needed.

Indeed, being (new) types, derived types have their own name (e.g. my_own_type), knowing that the names of all built-in types are reserved.

So, in order to define a derived type named my_own_type according to any kind of definition (designated here by A_TYPE_DEFINITION), the following syntax shall be used:

my_own_type :: A_TYPE_DEFINITION

For example, if various ports had to handle a number of vehicles, then it may be advisable to introduce a specific type for this purpose, which could be named vehicle_count.

In this case, this derived type would happen to be simply a synonym of the integer built-in type (the vehicle_count type would then be here an alias a the integer built-in one):

vehicle_count :: integer

Then, this new type being defined, ports may use it and specify that they handle channel values of that type, thanks to T: vehicle_count.

Type Constructs

Aliases are of course useful, yet one may have to specify types that are more complex than exact synonyms of the built-in types.

For that, the user can rely on following type constructs, which allow introducing new types, or combinations thereof:

union types, i.e. types that regroup other types and whose values can be of either one of these types

- in the specification of such an union type, the listed types are separated by the pipe (|) character, representing the OR operator

- for example, the union of types T1, T2 and T3 is: T1|T2|T3; the aforementioned number built-in type can be defined as: integer|float

symbol types, each of which being a simple label (a non-empty series of characters delimited by single quotes), like 'my_symbol' or 'Red Alert'; a symbol is both a type and a value, in the sense that defining a symbol is defining a type which happens to have a single value (itself) [9]; moreover type names are themselves symbols (without their single quotes); so defining my_type as, for example, integer, corresponds to the definition of an alias type, a synonym of integer which can be used in other type definitions (such as in [my_type])

enumerated types (a.k.a. enumerations) is a user-defined union of symbols, simply obtained from the two previous constructs; for example a burner_status enumeration type might be defined as:

'burner_enabled' | 'burner_disabled' | 'burner_on_operation'

and a value of that type (a symbol) may be, for example: 'burner_disabled'

One can see that the boolean type is actually nothing but a 'true'|'false' enumeration.

Defining a single-type enumeration corresponds to defining a symbol type.

| [9] | Symbols are mapped to Erlang atoms. |

Polymorphic Types

Finally, in addition to all the atomic types (built-in or derived) presented above, following built-in polymorphic types (types that depend on others) are supported as well:

- list of type T, noted as list(T) or [T]: any kind of (proper, homogeneous) list (empty or not), represented between square brackets, to account for a variable-size sequential container containing values of type T

- e.g. list(integer) and [integer] denote the same type, a list containing any number of integers; values of that type can thus be: [4,-17], [], etc.

- list() refers to any kind of list (alias of list(any))

- tuple containing elements of types T1, T2, T3, etc., noted as {T1,T2,T3,...}: any kind of tuple (fixed-size container, homogeneous or not), delimited by curly braces

- e.g. a value of the {burner_status,float,[bool]} type might be {'burner_on_operation',14.7,['false','false']}

- tuple(T) refers to tuples whose elements are all of type T, and whose number is not specified (e.g. {2,46,5} is of type tuple(integer)), while tuple() refers to any kind of tuple

- in later versions: associative tables, whose keys are of type Tk and values are of type Tv, noted as table(Tk,Tv)

Note that recursive types (e.g. a type tree being defined as {tree,tree,node_content}), are, at least currently, not allowed (they can be expressed yet no specific support for them is provided).

Implementation-wise

The dataflow infrastructure includes a TypeServer, which tracks statically (i.e. on a per-class level) or dynamically (for any dynamically-created port) the type declarations and uses, to provide a first, very basic support for typing enforcement.

Unit of a Value

A value of a given type (typically a float) can actually correspond to quantities as different as meters and kilowatts per hour.

Therefore units shall preferably be specified alongside with values, and a language to express these units must be retained. The U in SUTC stands for this unit information.

One should refer to the documentation of the Myriad layer [10] for a description of how units can be specified, compared, checked and used.

| [10] | Please refer to the Description of the Management of Units section, in the technical manual of the Myriad layer (in Ceylan-Myriad-Layer-technical-manual-english.pdf). |

In a dataflow, the unit of the values that will be held by a port shall preferably be specified when declaring that port. This is done thanks to a string, prefixed with "U:" (e.g. "U: kW.h", "U: g/Gmol.s^-2" or "U: {mm,mm,mm}" for a 3D vector in millimeters).

Specifying the unit of a scalar value implies declaring its type as float.

If, for a value, no unit is given, then its type, as discussed in Type of a Value, shall be specified.

Semantics of a Value

Specifying the type and unit of a value is certainly useful, yet it would generally be insufficient to convey its meaning, i.e. to express how that value shall be interpreted.

For example, knowing that a port accepts floating-point values in kilojoules does not tell whether this value corresponds to an energy demand, an actual consumption or a production.

Therefore this domain-specific information shall be specified separately. It is to be done thanks to the specification of a symbol (similar to a string, corresponding to an Erlang atom), prefixed with "S:", standing for semantics (which is the S in SUTC). For example: "S: 'security credentials'" or "S: 'energy_demand'".

We recommend that semantics are specified according to a well-defined, standard format: RDF (standing for Resource Description Framework).

RDF statements (potentially expressed as RDF triples) about subjects (e.g. a block or a port) clarify the intents (made them explicit and expressed in an uniform way) and may allow the use of tools able to perform queries and inference. This may enable, in the future, the automatic checking and even generation of proper dataflows.

Typically a port semantics is then a subject in RDF parlance, like in:

S:'http://foobar.org/urban/1.1/energy/demand'

where:

- the normalising organisation is designated by its domain name foobar.org

- it published a urban ontology, whose version in use here is 1.1

- it addresses potentially multiple fields of interest, including the one of energy

- one sub-topic of which is the demand (of energy)

As a result, the full chain (the output port, the channels, the value itself and the related input ports) can perform a basic check of the semantic consistency for each exchange over the dataflow, and have an extra chance of detecting and rejecting any erroneous port connection (even if in technical terms, i.e. in terms of typing and unit, it may look perfectly legit).

Currently a minimum lexicographic distance (the Levenshtein one) is enforced (by the SemanticServer of the dataflow infrastructure) between any two semantic elements, so that any spelling mistake can be more easily detected.

Generally the channel is shown as bearing the semantics, implying that this formalised meaning is shared by the corresponding output port, the associated input ports and by the values that they exchange.

Constraints Applying to a Value

The C in SUTC stands for this constraints information.

They allow to specify a set of rules by which the value must abide.

Note

Unlike most of the other meta-data (e.g. semantics or type), constraints are not considered as being intrinsic to a value; they are generally seen as a property (on values) that is enforced at the port level.

The following constraints can be mixed and matched:

- {greater_than,G} means that the (scalar) value must be greater than, or equal to, the number G

- {lower_than,L} means that the (scalar) value must be lower than, or equal to, the number L

- {between,A,B} means that the (scalar) value must be greater than, or equal to, the number A and lower than, or equal to, the number B

- {in,L} means that the value must be an element of the list L

- positive means that the (scalar) value must be positive (possibly null)

- strictly_positive means that the (scalar) value must be strictly positive (null not allowed)

- negative means that the (scalar) value must be negative (possibly null)

- strictly_negative means that the (scalar) value must be strictly negative (null not allowed)

- non_null means that the (scalar) value must not be null (strictly positive or negative, zero not allowed)

For example, constraints applying to a value could be:

C: [ {between,2020,2040}, {in, [1989,2021,2030,2988]} ]

Note

As mentioned, for all numerical comparisons (e.g. greater_than), the value of interest is expected to be a (scalar) number.

Otherwise (e.g. the value is a triplet, or the value is not a number), the associated constraint is considered as not satisfied.

All constraints have to apply (as if they were associated by AND operators). The previous example would thus allow only two possible values, 2021 and 2030.

Various additional kinds of constraints may be supported, based on encountered needs.

Constraints are currently parametrised by constants (e.g. {greather_than,10.0}); maybe in the future they could also accept references onto other local ports (e.g. to compare their values or base some constraints on operations, like sum, performed on their values).

Accuracy of a Value

This may be the next value-level metadata to be handled by the dataflow infrastructure.

Depending on various factors like data quality and numerical errors (e.g. floating-point rounding), the computed values might show a good precision and many digits, yet a poor accuracy.

The first step to prevent it is to measure how accurate a computation is. This can be evaluated thanks to relative error and ulps (for units in the last place).

So an accuracy may be associated to each value exchanged over the dataflow, and it may then be updated by each processing unit relying on it.

By default accuracy is best measured in terms of relative error, as, if ulps are the most natural way to measure rounding error, they are less suitable to analyse the error caused by various formulas.

Anyway, often only the order of magnitude of rounding errors is of interest, and ulps and relative errors may be used interchangeably since their magnitude differ by at most a constant factor, the radix, typically equal to 2 (binary representation), or less frequently 10 (decimal one).

Another measure could be the "precision", once defined as the number of bits used to represent the significand of a floating-point number. Libraries like MPFR can be given a target, arbitrary precision and may enforce it (hence we would expect the accuracy of the corresponding values to be constant across the corresponding ports).

Each project is free to retain its own conventions regarding how the accuracy is quantified (usually as a floating-point number). The dataflow infrastructure provides the mechanisms to keep track of it, and, in processing units, update it. An accuracy specification is to be prefixed with "A:", like in "A: 8".

Should no accuracy be used for a given value, it should be replaced by the 'unknown_accuracy' atom (which is the default).

The accuracy could be also translated as a confidence interval, i.e. an interval that covers an unknown parameter with probability P=1-alpha, where P is the confidence level, and alpha should be as close as possibly to 0 (typical values of P: 0.95, 0.99 or 0.999) thanks to a sufficiently large number of samples.

As no general consensus exists about accuracy, it has not been included among the usual metadata associated to values. In the future this could added, accuracy becoming the A of SUTCA.

Wrapping Up About Values

So an output port may send a notification to a set of input ports, with the following information being associated:

- a semantics, like in S: 'energy_demand'

- a unit, like in U: kW.h

- a type, like in T: float

- constraints, like in C: [{lower_than,100}]

- an accuracy, like in A: 11.0, to be understood here as the number of bits for the precision of the significand

- a value, like in 6.7121

- a timestamp, like in {117,3}, i.e. tick offset #117, diasca 3

- the port identifier of the sender

The semantics, the unit, the type carried by endpoints and the sender port identifier are exchanged and checked at the channel creation, i.e. when the input port is linked to its output one.

The unit of the value, its associated constraints, its accuracy, its actual value and its timestamp are checked and sent to the input port each time it is triggered by its output port.

Logic of the Dataflow Block Activation

We saw that a key element of a dataflow lies in its blocks, notably in its processing units.

Note

Blocks are either dataflow objects or dataflow units. We will discuss here mainly of the latter (i.e., of units), as the activation of a dataflow object offers no flexibility: it will be activated iff at least one of its input ports has been assigned, leading to its corresponding attribute(s) being set, and to the associated outport(s) being in turn assigned.

For a given dataflow block, it must be decided:

- at which logical step the block is to be activated, i.e. when the activation of a block shall be examined

- on which additional condition(s) it shall be activated, i.e. how in practice the block update shall be determined as having to be triggered

- what results from such an activation, i.e. what are the operations this block should then perform

Such an activation translates to the execution of the activate/1 method of that block (at this point, it is most probably a processing unit). The role of this method is to be the place where the unit defines its actual processing; for that, the unit most probably overrode the corresponding default do-nothing implementation.

During this processing, as for any actor oneway, the unit is free to perform computations, to send actor messages and to operate state changes. This includes notably reverting any of its input ports to the unset state, and activating any of its output ports.

Now that it has been determined what an activation entails (pretty much anything within the degrees of freedom of an actor), the conditions ruling when an activation shall occur are to be specified. Various policies are available for that.

For a given activation policy, these conditions should only depend on the readiness of the input ports of that unit, and of its state.

Even if a given processing unit might define its own activation rules, the set of built-in activation policies described below should be sufficient for most uses.

In all cases, under the hood the unit will be notified thanks to an actor message that one of its input ports has been triggered, knowing that during a diasca any number of such messages may be received (indeed a unit may have multiple input ports; moreover, even if it may not be usual practice, an upstream block might have triggered one of its output ports more than once) and then reordered before being processed on the next diasca.

The 'Activate On New Set' Policy

The first built-in activation policy consists in updating the unit when at least one of its input ports went from unset to set.

This policy, named activate_on_new_set, will activate the unit at most once per diasca, at the one immediately following the diasca at which these input ports were triggered, no matter of how many input ports were triggered on the previous diasca nor on how many times they were each triggered.

An (unordered) list of input port triggers, together with the corresponding values then set, will be available to the activate/1 method when it will be automatically executed.

Either a bulk update may follow (the unit taking them into account as a whole), or it may perform a fold on that list to react in turn to each trigger (to emulate the case where they would be received and processed one after the other [11]).

| [11] | Note that, as all actor messages, the triggers have been reordered by the engine according to the simulation mode. |

It is up to the unit to reset the input ports (i.e. to set each of them back to the unset state) when deemed appropriate.

This Activate On New Set policy (sometimes shortened as the "On New" policy) is graphically symbolized as an arrow, to denote that any update of an input port directly triggers the associated unit computation.

The 'Activate When All Set' Policy

The second built-in activation policy, named activate_when_all_set, is to update the unit if and only if all of its input ports have been set: each time an input port is triggered, this policy automatically determines if it was the last one still unset and, if yes, it executes the activate/1 method.

Note

This policy used also to take care, once that method had been executed, of automatically setting back all input ports to their unset state.

As at least some models rely on "stable" inputs (inputs that vary infrequently, if ever - and thus may be set only once, but read multiple times), we preferred disabling that mechanism. So, now, in all cases, input ports are never automatically unset.

This Activate When All Set policy (sometimes shortened as the "When All" policy) is graphically symbolized as a star resembling to a lock, to denote that no associated unit computation will take place until all input ports have been enabled (i.e. are set).

Custom Activation Policies

Some units may require, under rare circumstances, a custom policy, i.e. a policy of their own that does not match any of the built-in ones.

For example source units, i.e. units not having any input port, can be defined, but of course then none of the policies above can apply (as they can never be triggered). Nevertheless such source units are typically needed in order to bootstrap the processing of a dataflow.

To solve this, rather than forcing the definition of at least one "dummy" input port per unit, all units can also be explicitly triggered: they can rely on their activateExplicitly/2 actor oneway for that, in charge of calling their activate/1 oneway as other policies do.

This policy may for example also be used to account for units having fixed, active temporalities. A daily-activated unit may schedule itself every 24 hours (declaring such a regular spontaneous scheduling, during which it may activate its output ports), while another unit may be ruled per-hour.

So dataflows can federate mixed temporalities, knowing that the use of this policy of explicit activation is fully optional (as by default a dataflow is fully passive and is only driven by changes in its input ports) and shall be regarded only as a last resort, should the built-in policies be insufficient.

This Custom policy is graphically symbolized as a sheet of paper, to denote that the unit activation is driven by a freely chosen user-specified logic.

On Dataflow Objects

We asserted previously that the most common form of dataflow block is the processing unit; the other major form is the dataflow object, discussed here.

Indeed, if dataflow units allow to describe the computations that shall be performed, generally they have to rely on the structure of the simulated world in order to feed their computations with relevant data.

Holding these information, and possibly making them change over time, is the purpose of the dataflow objects. They are plain, standard (Sim-Diasca) actors, except that they may define dataflow attributes, i.e. their own state attributes that can be involved in dataflows.

To a dataflow attribute is indeed generally associated a pair of ports, an input one and an output one. These ports allow to bridge the gap between two worlds:

- the one of the multi-agent, dynamic, loosely coupled actors, serving the purpose of describing a disaggregated target system and its evolution

- the one of the (mostly statically connected) dataflow units, in charge of performing computations over a target system

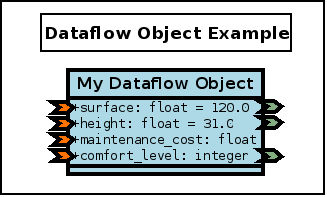

A dataflow object is represented with the light-blue background that is common to all dataflow blocks, while each of its attributes is associated to an input and/or an output port (typically both), like in:

In this example, all attributes are standard, "bidirectional" attributes (they can be read and/or written by other dataflow blocks), except the maintenance cost, which is a "terminal" attribute (in the sense that it can be set, yet cannot be read by other blocks of the dataflow).

On Model Assemblies

Defining the Notion of Assembly

As seen already, within simulations, the target system (e.g. a city) is translated into a set of instances of dataflow objects of various types (e.g. Building, Household, etc.), on which models - themselves made of a set of instances of processing units of various types (e.g. EnergyDemand, PollutionExhausted, etc.), complemented with at least one unit manager - are to operate.

The (generally interconnected) set of models involved into a single simulation is named a model assembly.

Assemblies may comprise any number of models: generally at least one, most often multiple ones, since the purpose of this dataflow approach is to perform model coupling.

Let's from now adopt the convention that a model name is a series of alphanumerical characters (e.g. FoobarBazv2) and that its canonical name is the lowercase version of it (e.g. foobarbazv2).

Ad-hoc Assemblies

One option is that a FoobarBazv2 model is directly integrated in a dataflow thanks to an ad-hoc simulation case for a target assembly, a case whose name could be freely chosen (e.g. my_foobarbazv2_case.erl).

Then, in this simulation case (accounting for the corresponding assembly), all relevant FoobarBazv2-specific settings would have to be directly specified (e.g. the elements to deploy for it, the unit managers it is to rely on, etc.); note that these model-specific information would be somewhat hardcoded there.

Dynamic, Composable Assemblies

Alternatively, rather than potentially duplicating these settings in all cases including that model, one may define instead a foobarbazv2-model.info file (note the use of its canonical name) that would centralise all the settings relevant for that model, in the Erlang term format [12].

For example it could result in this file having for content (note that these settings can be specified in any order):

% The elements specific to FoobarBazv2 that shall be deployed: { elements_to_deploy, [ { "../csv/Foobar/Baz/version-2", data }, { "../models/Foobar-Baz", code } ] }. % To locate any Python module accounting for a processing unit: { language_bindings, [ { python, [ "my-project/Foobar-Baz/v2" ] } ] }. % Here this model relies on two unit managers: { unit_managers, [ class_FoobarBazEnergyUnitManager, class_FoobarBazPollutionUnitManager ] }.

| [12] | Hence this file will simply store a series of lines containing Erlang terms, each line ending with a dot (i.e. the format notably used by file:consult/1). We preferred this format over JSON as the scope of these information is strictly limited to the simulation, and being able to introduce comments here (i.e. lines starting with %) is certainly useful. |

Defining the needs of a model as such enables the definition and use of dynamic assemblies, that can be freely be mixed and matched.

Indeed, should all the models of interest have their configuration file available, defining an assembly would just boil down to specify the names of the models it comprises (e.g. FoobarBazv2, ACME and ComputeShading; that's it).

Envisioned Extensions

In the future, the disaggregated view of the simulation regarding the target system (decomposing it based on dataflow objects) could be the automatic byproduct of the gathering of the models within an assembly: each model would declare the dataflow objects it expects to plug into and the corresponding attributes (with metadata), then an automated merge would check that this coupling makes sense ( and would generate a disaggregated view out of it.

In said model-specific configuration file, we could have for example:

{ dataflow_objects, [ { class_Building, [ % Name of the first attribute: { surface, % Corresponding SUTC metadata: % First the semantics: [ "http://foobar.org/surface" ], % Then the unit, type (no constraint here): "m^2", float }. { construction_date, ... } ] }, { class_Household, [ { child_count, ... } ] } ] }.

Then, before the start of the simulation, each model of the assembly could be requested about its dataflow objects, and they could be dynamically defined that way, if and only if, of each attribute of each dataflow object mentioned, all definitions agreed (equality operation to be defined for the SUTC metadata).

A More Complete Example

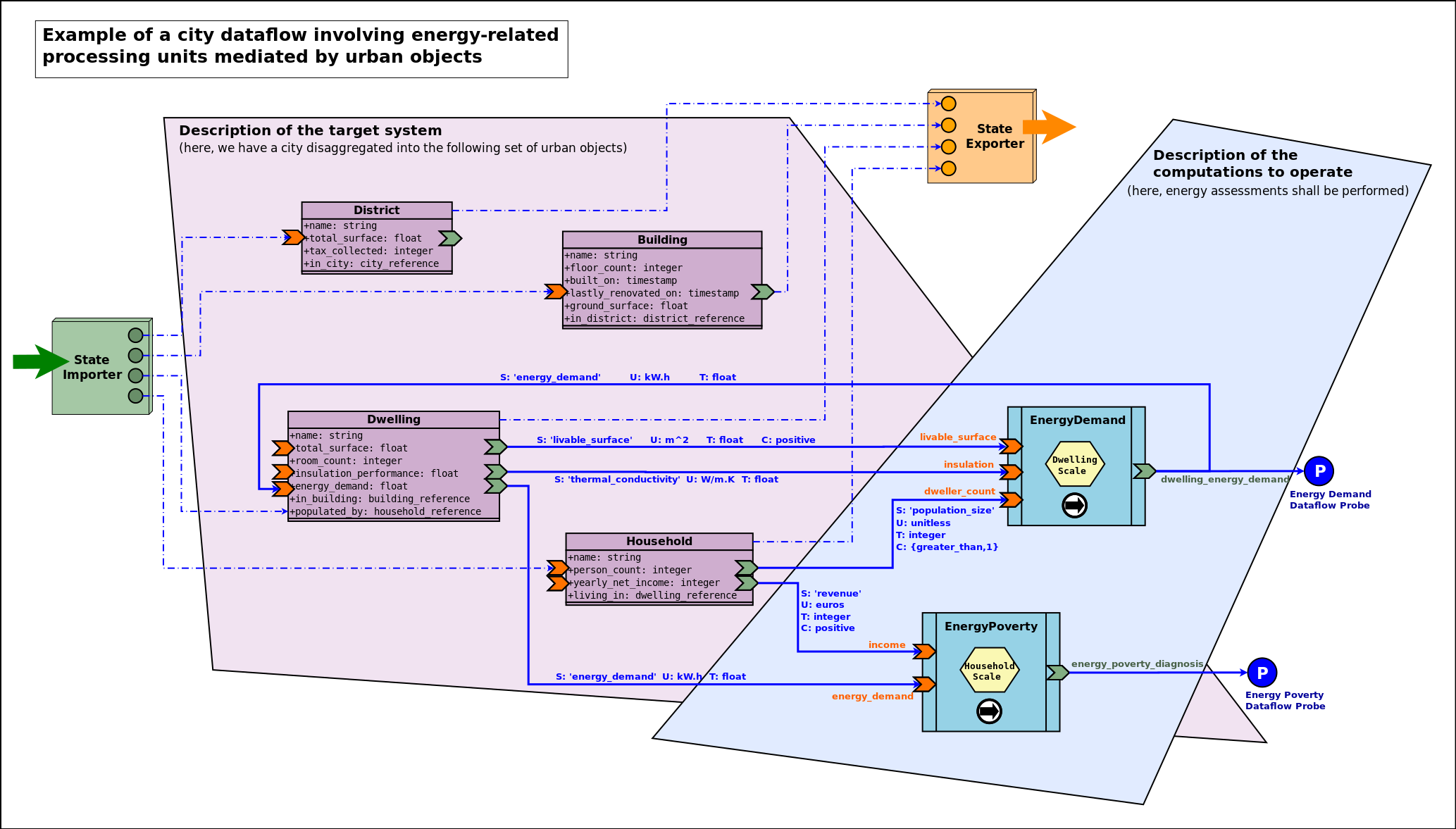

Here we took the case of an hypothetical modelling of a city, in which the target system happens to be disaggregated into districts, buildings, etc.

A View Onto a Theoretical Simulation

Here we propose to enforce an additional, stricter convention, which is that no two computation processing units shall interact directly (i.e. with an output port of one being linked to an input port of the other); their exchanges shall be mediated by at least one dataflow object instead. As a consequence a unit interacts solely with the target system.

Respecting such a convention allows to uncouple the processing units more completely: one can be used autonomously, even if the other is not used (or does not even exist).

As a result, this example simulation consists on the intersection of two mostly independent planes, the one of the target system (in light purple, based on dataflow objects) and the one of the computations applied on it (in light blue, based on computation units).

This intersection is implemented thanks to dataflow objects and the related channels (in blue), since they are making the bridge between the two planes.

One can also notice:

- two dataflow probes (on the right), should specific results have to be extracted from the dataflow (read here from the output ports of some blocks)

- external state importer and exporter, supposing here that this simulation is integrated into a wider computation chain (respectively in charge of providing an input state of the world at each time step, and, once evaluated and updated by the dataflow, of reading back this state and possibly transferring it to other, third-party, computational components; they are specialised versions of experiment entry and exit points).

We can see that we have still here a rather high-level, abstract view of the dataflow: types are mentioned (e.g. Building) instead of instances (e.g. building_11, building_12, etc.), and managers (discussed later in this document) are omitted.

A View Onto an Actual Simulation

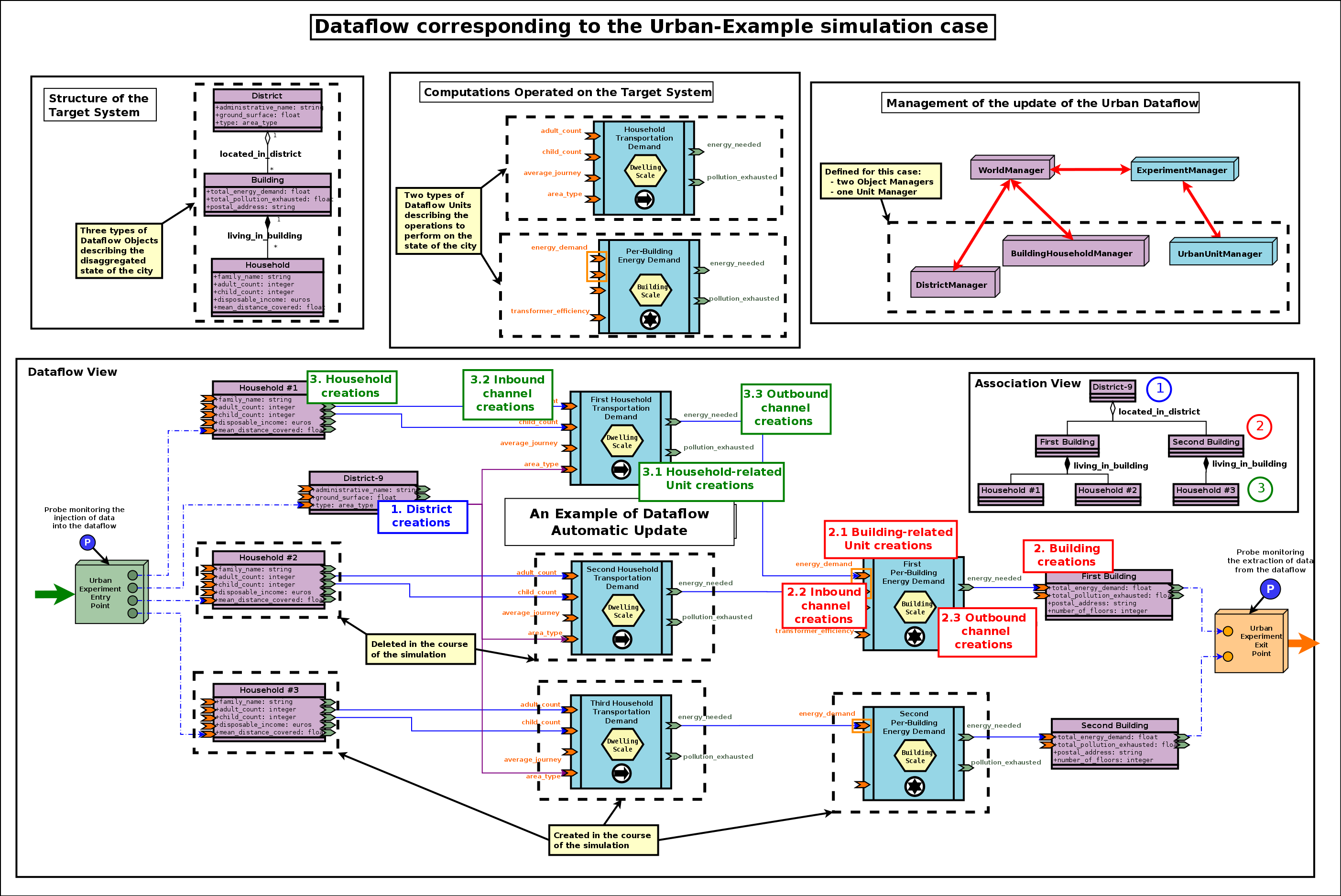

The following instance-level diagram describes the simulation case whose sources are available in the mock-simulators/dataflow-urban-example directory:

This case demonstrates the following elements:

- two specific entry and exit experiment points

- two types of processing units, one of which relies on an (input) port iteration

- four unit instances

- two unit activation policies

To run that example:

$ cd mock-simulators/dataflow-urban-example/src

$ make batch

Alternatively, to run a display-enabled version thereof, one may run make run instead.

Developing Dataflow Elements in Other Programming Languages

The dataflow infrastructure, like the rest of Sim-Diasca, uses a single implementation language, Erlang, which may be readily used in order to implement, notably, dataflow processing units.

However it may be useful to introduce, in one's simulation, dataflow blocks (e.g. a set of processing units corresponding to at least one model) that are implemented in other programming languages, especially if they are for the most part already developed and complex: integrating them in a dataflow might involve less efforts than redeveloping them.

To ease these integrations, language bindings have been defined, currently for the Python and the Java languages - still to be used from GNU/Linux. These bindings provide APIs in order to develop dataflow constructs in these languages and have them take part to the simulations.

Note that a language binding often induces the use of a specific version of the associated programming language (e.g. the Python binding may target a specific version of Python). We tend to prefer the latest stable versions for these languages (as they are generally more stable and provide more features), however in some cases some helper libraries that might be proposed for inclusion by models (for their internal use) may not be updated yet.

In that case, should these extra dependencies be acknowledged, a language downgrade may be feasible, until these libraries are made compliant again with the current language version.

One should refer to the Sim-Diasca Coupling HOWTO for further information regarding how third-party code can be introduced in a Sim-Diasca simulation, whether or not it is done in a dataflow context.

Python Dataflow Binding

General Information

This binding allows to use the Python programming language, typically to write dataflow processing units.

The Python version 3.6.0 (released on December 23rd, 2016) or more recent is to be used. We recommend to stick to the latest stable one (available here). Let's designate by A.B.C the actual version of Python that is used (e.g. A.B.C=3.6.0).

A Python virtual environment, named sim-diasca-dataflow-env, is provided to ease developments.

Binding Archive

All necessary binding elements (notably the virtual environment and the sources) are provided in a separate archive (which includes notably a full Python install), which bears the same version number as the one of the associated Sim-Diasca install [13].

| [13] | The sources of this binding in the Sim-Diasca repository can be found in sim-diasca/src/core/src/dataflow/bindings/python/src (from now it is called "the binding repository"). |

This binding archive has to be extracted first:

$ tar xvjf Sim-Diasca-x.y.z-dataflow-python-binding.tar.bz2

$ cd Sim-Diasca-x.y.z-dataflow-python-binding

Python Virtual Environment

It should be ensured first that pip (actually pipA.B, like in pip3.6) and virtualenv are installed.

For example, in Arch Linux (as root), supposing that a direct Internet connection available:

$ pacman -Sy python-pip $ pip install virtualenv

We will make here direct use of the virtual environment that will be obtained next; one may alternatively use virtualenvwrapper for easier operations.

Recommended: Getting this Virtual Environment Directly from the Binding Archive

The virtual environment corresponding to this binding is located at the root of the archive, in the sim-diasca-dataflow-env tree.

It can be used as it is, without further effort.

Alternate Mode of Operation: Recreating this Virtual Environment

If using directly the binding archive is the recommended approach, in some cases one may nevertheless want to recreate the virtual environment by oneself.

Then, as a normal user, an empty environment shall be created, activated and populated with the right packages:

$ virtualenv sim-diasca-dataflow-env --python=pythonA.B $ source sim-diasca-dataflow-env/bin/activate $ pip install -r sim-diasca-dataflow-env-requirements.txt

Note

Care must be taken so that the same A.B Python version as the one in the archive is specified here.

We hereby supposed that the Bash shell is used. If csh or fish is used instead, use the activate.csh or activate.fish counterpart scripts.

Using this Virtual Environment

To begin using it, if not already done, one should activate it first:

$ source sim-diasca-dataflow-env/bin/activate

Then all shell commands will use a prompt starting with "(sim-diasca-dataflow-env)" to avoid that the user forgets that this environment is enabled.

Packages provided in this environment shall be managed thanks to pip.

The list of the packages used by default by the binding is maintained in the sim-diasca-dataflow-env-requirements.txt file (available at the root of both the binding archive and repository) [14].

| [14] | It is obtained thanks to: pip freeze > sim-diasca-dataflow-env-requirements.txt. |

From now on, any additional package that one installs (using pip) will be placed in this sim-diasca-dataflow-env directory, in isolation from the global Python installation.

The list of the packages currently used (in the context of this virtual environment) can be obtained thanks to:

$ pip list

Once finished with it, the virtual environment can be deactivated with deactivate (now directly available from the PATH):

$ deactivate

Sources of the Binding

The binding itself, relying for its execution on the aforementioned virtual environment, is a regular (as opposed to a namespace one) Python package named sim_diasca_dataflow_binding, located under the same name at the root of the binding archive [15].

| [15] | As mentioned before, its sources in our repository are located in the sim-diasca/src/core/src/dataflow/bindings/python/src directory. |

Use of the Binding

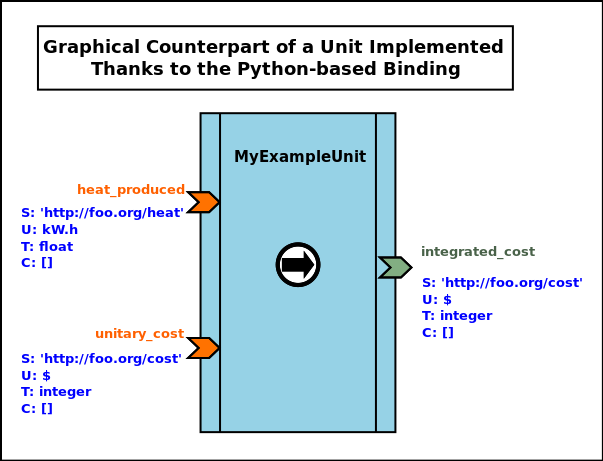

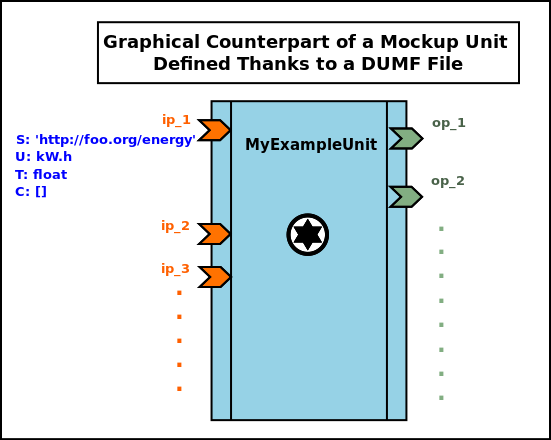

Let's take the example of a user-defined processing unit, let's say in my_example_unit.py, that may be graphically described as:

Its corresponding Python-based full implementation may be:

from sim_diasca_dataflow_binding.common import trace from sim_diasca_dataflow_binding.common import error from sim_diasca_dataflow_binding.dataflow import unit class MyExampleUnit(unit.ProcessingUnit): """A unit computing heat and fees; 2 input ports, 1 output one.""" def __init__(self,name:UnitName,relative_fee:float): # A static, constant fee applied to some costs: self.fixed_fee = 115.0 # An instance-specific proportional fee: self.relative_fee = relative_fee my_input_specs = [ InputPortSpec('heat_produced','http://foo.org/heat','kW.h','float'), InputPortSpec('unitary_cost','http://foo.org/cost','$','integer') ] my_output_specs = [ OutputPortSpec('integrated_cost', 'http://foo.org/cost','$','float') ] unit.ProcessingUnit.__init__(self,name,my_input_specs, my_output_specs,ActivationPolicy.on_new_set) def activate(self) -> None: """Automatically called by the dataflow, as requested by the selected activation policy.""" input_cost_port='unitary_cost' # 'Activate On New Set' policy, hence may be unset: if self.is_set(input_cost_port): self.debug("Computing fees.") cost = self.get_input_port_value(input_cost_port) new_cost = self.apply_fees(cost) self.set_output_port_value('integrated_cost', new_cost) # The domain-specific logic is best placed outside of the # dataflow logic: def apply_fees(self,cost:float) -> float: """Applies all fees to specified cost.""" return self.fee + cost * self.relative_fee

For reference, in sim_diasca_dataflow_binding/dataflow/unit.py, we may have the following definitions (used in our example unit above):

from enum import Enum # Type aliases (mostly for documentation purposes): Semantics = str Unit = str Type = str Constraint = str BlockName = str PortName = str InputPortName = PortName OutputPortName = PortName PortValue = Any # Taken from https://docs.python.org/3/library/enum.html#functional-api: class AutoNumberedEnum(Enum): def __new__(cls): value = len(cls.__members__) + 1 obj = object.__new__(cls) obj._value_ = value return obj class ActivationPolicy(AutoNumberedEnum): on_new_set = () when_all_set = () custom = () class InputPortSpec(): def __init__(self, name:InputPortName, semantics:Semantics, unit:Unit, type:Type, constraints=List[Constraint] ): [...] class OutputPortSpec(): [...] class Port(): [...] class InputPort(Port): [...] class OutputPort(Port): [...] class ProcessingUnit(trace.Emitter): """Base, abstract, dataflow processing unit.""" def __init__(self, name:UnitName, input_specs:List[InputPortSpec], output_specs:List[OutputPortSpec], activation_policy:ActivationPolicy): # Class-specific attribute declaration: # - input_ports={} : Mapping[InputPort] # - output_ports={}: Mapping[OutputPort] # - activation_policy: ActivationPolicy trace.Emitter.__init__(self,name,category="dataflow.unit") self.trace("Being initialised.") self.register_input_ports(input_specs) self.register_output_ports(output_specs) self.activation_policy=validate_activation_policy(activation_policy) def register_input_ports(self, specs:List[InputPortSpec]) -> None: [...] def register_output_ports(self, specs:List[OutputPortSpec]) -> None: [...] def validate_activation_policy(policy:Any) -> ActivationPolicy: [...] def is_set(self,name:InputPortName) -> bool: """Tells whether specified input port is currently set.""" [...] def get_input_port_value(self,name:InputPortName) -> PortValue: """Returns the value to which the specified input port is set. Raises ValueNotSetError if the port is not set. """ [...] def set_output_port_value(self,name:OutputPortName,PortValue) -> None: [...] def activate(self) -> None: """Evaluates the processing borne by that unit. Once a unit gets activated, it is typically expected that it reads its (set) input ports and, based on their value and on its own state, that it set its output ports accordingly. """ pass

Binding Implementation

The Python dataflow binding relies on ErlPort for its mode of operation.

Please refer to the Sim-Diasca Technical Manual to properly install this binding.

Java Dataflow Binding

This binding allows to use the Java programming language, typically to write dataflow processing units.

The Java version 8 or higher is recommended.

Note

Unlike the Python one, the Java Binding is not ready for use yet.

Other Language Bindings

A low-hanging fruit could be Ruby, whose binding could be provided relatively easily thanks to ErlPort.

Rust could be quite useful to support as well.

More Advanced Dataflow Uses

Dynamic Update of the Dataflow

One may imagine, dynamically (i.e. in the course of the simulation):

- creating or destroying blocks

- creating or destroying channels

- updating the connectivity of channels and blocks

- creating or destroying input or output ports of a block

This would be useful as soon as the target system is itself dynamic in some way (e.g. buildings being created in a city over the years, each building relying on its associated computation units - which thus have to be dynamic as well, at least with varying multiplicities).

Moreover, often all the overall layout cannot be statically defined, and the dataflow as a whole has to be dynamically connected to the components feeding it or waiting for its outputs (e.g. a database reader having to connect in some way to some input ports) - so some amount of flexibility is definitively needed.

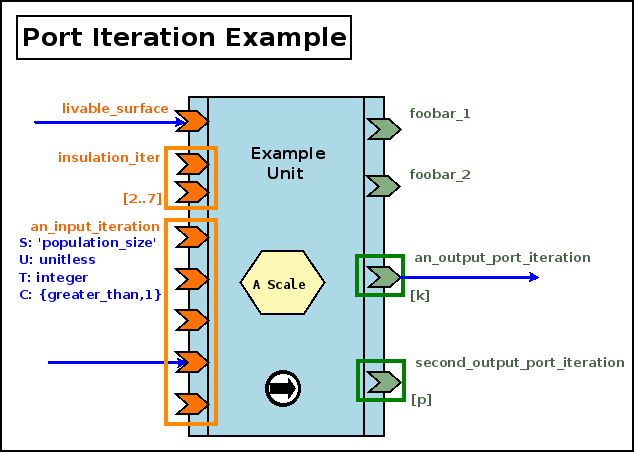

Iteration Specification & Iterated Ports

Usage Overview

In some cases, a given type of unit may support instances that can have, each, an arbitrary number of ports relying on identical metadata (i.e. ports that have to obey the exact same specifications).

An example of that is a processing unit aggregating a given metrics associated to each building of a given area: each instance of that unit may have as many input ports (all having then exactly the same metadata) as there are different buildings in its associated area, and the class cannot anticipate the number of such ports that shall exist in its various instances [16].

| [16] | Not to mention that buildings may be created and destroyed in the course of the simulation, so, even for any given instance, the number of ports may have to change over simulation time... |

Supporting iterated ports spares the need of defining many ports that would happen to all obey the same specification; typically, instead of declaring by hand an energy_demand port and similar look-alike ports (that could be named energy_demand_second, energy_demand_third, etc.) with the same settings, an energy_demand(initial,min,max) port iteration can be specified.

This leads, for each instance of this unit, to the creation of initial different iterated ports, all respecting the energy_demand port specification.

At any time, for each of these unit instances, there would be at least min instances of such ports, and no more than max - that can be either a positive integer, a named variable or the * (wildcard) symbol, meaning here that a finite yet unspecified and unbounded number of these ports can exist.

More precisely, in a way relatively similar to the UML conventions regarding multiplicities, for a given port iteration one may specify either a fixed number of iterated ports, or a range:

| Multiplicity | Examples | Meaning: for this iteration, at all times there will be: |

|---|---|---|

| Fixed constant | 7 | Exactly 7 iterated ports |

| Variable name | n | Exactly n iterated ports |

| * | * | Any number of iterated ports |

| Min..Max | 0..4,a..b, 2..* | Between Min and Max iterated ports (bounds included) |

In a given dataflow, variable names (e.g. m, n, o, etc.) are expected to match. For example, if the variable n is referenced more than once in the dataflow, then all its occurrences refer to the same value (which is let unspecified in the diagram).

Iterated ports are automatically named by the runtime (e.g. energy_demand_iterated_1, energy_demand_iterated_2); they are standard ports and thus their name shall remain an identifier [17].

| [17] | This is why the runtime enforces the single restriction that applies to port names, which is that the _iterated_ substring cannot exist in such a user-defined name. |

Graphically, the set of ports corresponding to an iteration is represented as a rectangle enclosing these iterated ports (when representing actual instances, the rectangle may be empty); multiplicities are to be specified between brackets (to distinguish them from the iteration name) and preferably at the bottom of the associated rectangle.

All these elements shall be of the same color as the one of the port. Any common metadata shall preferably be listed once.

An example of these conventions is the following processing unit, featuring two input and and two output port iterations:

We can see above that, at any time, the first input iteration shall have between 2 and 7 (included) iterated ports, while the second input iterations is detailed graphically and has exactly 5 iterated ports ([5] is thus implied).

As for the output iterations, both have a certain number of iterated ports, and, as k is different from p, their respective counts of iterated ports may not match (e.g. we can have k=2 and p=0).

In Terms of Implementation

An iteration specification enables the creation of multiple (input or output) ports of the same type, each of these instances being designated as an iterated port (technically an iterated port is nothing but a standard port).

For example, defining an iteration specification of 3 instances named foobar will result in the creation of 3 actual (iterated) ports, each complying to this specification, and named foobar_iterated_1, foobar_iterated_2 and foobar_iterated_3 [18].

| [18] | As mentioned, once created, these three ports will be fully standard ports; the _iterated_ substring helps avoiding that the names of iterated ports clash with other ports (it convey no specific meaning as such). |

By default, port specifications are not iterated: in implementation terms, the is_iterated field of a port specification is set to false.

To enable the creation of iterated ports, the is_iteration field of the corresponding port specification shall be set to either of these three forms:

- {Initial,{Min,Max}} where Initial is the initial number of iterated ports to be created according to this specification, and Min and Max are respectively the minimum and maximum counts of corresponding iterated ports that are allowed to exist

- then the runtime will create the corresponding initial number of instances (whose name is suffixed as mentioned with an incremented number starting from 1), and ensure that, even in the presence of runtime port creations, the number of the corresponding iterated ports will remain within specified bounds

- Initial and Min are positive integers, while Max can also be set to the unbounded atom to allow for an unlimited number of such iterated ports; of course Initial must be in the {Min,Max} range

- {Initial,Max}, which is a shorthand of {Initial,{_Min=0,Max}}

- Initial, which is a shorthand of {Initial,{_Min=0,_Max=unbounded}}

Domain-Specific Timestamps

By default, engine ticks directly translate to a real, quantified simulation time: depending on the starting timestamp and on the selected simulation frequency, a given tick corresponds to an exact time and date in the Gregorian calendar (e.g. each month and year lasting for the right number of days - not respectively, for example, 30 and 365, a simplification that is done in some cases in order to remain in a constant time-step setting).

However, in some simulations, models are ruled by such a strange simplified time [19], so domain-specific timestamps may be useful. Their general form is {timescale(),index()}, where timescale() designates the selected time granularity for the underlying channel (e.g. constant_year, month_of_30_days) and index() is a counter (a positive integer corresponding to as many periods of the specified time granularity).

| [19] | Even if this oversimplification just by itself yields already significant relative errors (greater than 10%). |

For example, in a simulation some models may be evaluated at a yearly timescale, while others would be at a daily one. Considering that initial year and day have been set beforehand, a timestamp may become {yearly,5} or {daily,421}. This could be used to check that connected ports have indeed the same temporality (e.g. no yearly port linked to a daily - a timescale convertor unit needing to be inserted in that case), and that none of such timescale-specific timesteps (i.e. index) went amiss in the channel.

Usefulness of Cyclic Dataflows?

One can notice that cyclic dataflow graphs are allowed by this scheme based on input and output ports, and that even "recursive dataflow objects" (i.e. dataflow objects having one of their output port connected to one of their input ones) can exist.

Of course some convergence criterion is needed in order to avoid a never-ending evaluation.

Experiments

As mentioned in the Experiment Definition section, an experiment is the top-level abstraction in charge of driving the computations applying to a simulated world.

Purpose of Experiment Endpoints

Depending on the project, it may be convenient for a given experiment relying on any number of dataflows to define a pair of components in charge of triggering and terminating its evaluation (typically once a specified number of steps were performed), as points of entry and exit, respectively.

For that, base classes are provided (ExperimentEntryPoint and ExperimentExitPoint) that are meant, if needed, to be subclassed on a per-project basis.

The purpose of these (optional) endpoints is to drive multiple dataflow instances, possibly on par with some external software (e.g. any overall integration platform).

Their impact in the progress of an experiment is discussed next.

Experiment Progress

In terms of logical ordering, the usual course of action of a dataflow-oriented simulation discussed here is [20]:

- a new simulation tick T begins: the experiment entry point (which is an actor) is scheduled

- a synchronisation stage, detailed in the next sections, occurs: the dataflows are suspended, and the state of the simulated world is updated first, then the computations that shall be applied on it are modified accordingly

- then the dataflows are resumed, and it usually triggers (based on intermediate logical moments, i.e. diascas) in turn (source) dataflow objects and/or processing units

- this may trigger cascading updates of dataflow elements over diascas

- once all evaluations are done, the experiment exit point (another actor) is to wrap up all information and perform any related tick-termination operation (e.g. sending an update regarding the newer state of the simulated world to a third-party platform)

- then the next planned tick begins (usually this corresponds to T+1), and the process continues until a termination criterion is met (typically a final timestamp is reached, or the simulation is notified that no changes are to be expected anymore)

| [20] | To shed some light on the related implementation (as the technical organisation is a bit different), please refer to the Scheduling Cycle of Experiments. |

As a result, the processing of a given timestep may boil down to an overall three-step process:

- synchronisation of the state of the simulation from an external source (thanks to a state importer - generally an experiment entry point)

- then evaluation of the corresponding dataflow

- then update of an external target, based on the resulting state of the simulation (thanks a state exporter - generally an experiment exit point)

These external source and target may actually correspond to the same data repository, held by a more general platform.

The rest of that HOWTO explained with great detail how step B is tackled. We will thereafter focus thus on step A, knowing that step C in many ways is a reciprocal of this step, and thus may be at least partly deduced from the understanding of step A.

A General View on the Dataflow Synchronisation

The goal is to properly manage the transformation of a source dataflow into a target one, in the context of the simulation of a system.

More precisely, here the source dataflow is the one obtained after the evaluation of a timestep, while the target dataflow is the one that shall be evaluated at the next timestep.

In-between, an external operator may apply any kind of changes to the simulated system, and of course these changes shall be reflected onto its dataflow counterpart.

Such changes may be defined programmatically [21] or be discovered at runtime from an external source [22]. The next sections will focus on the latter case, which is the trickier to handle.

| [21] | If the dataflow is to be managed programmatically (i.e. thanks to specific code), then a user-defined program is to use the various dataflow-relative primitives in order to create the initial state of the dataflow and possibly update it in the course of the simulation; see, in mock-simulators/dataflow-urban-example, the dataflow_urban_example_programmatic_case.erl test case for that. |

| [22] | This setting is illustrated by the dataflow_urban_example_platform_emulating_case.erl test case. |

As already mentioned, dataflows are made of blocks, linked by channels, and two different sorts of blocks exists here:

- the dataflow blocks, which are in charge of describing the current state of the simulated world

- the dataflow processing units, which are in charge of performing domain-specific computations onto that state

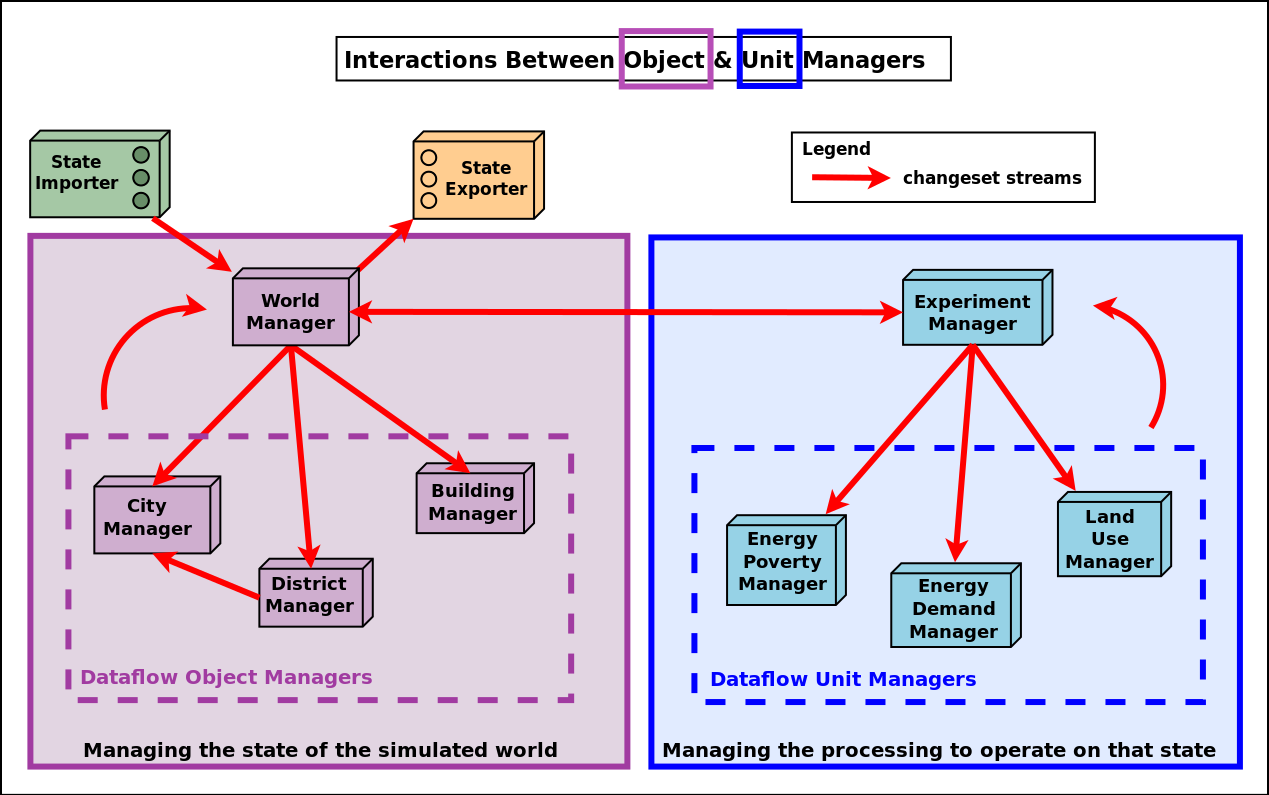

Let's introduce first, and in a few words only, the main elements that are provided in order to transform a dataflow into another:

- the changes in the state of the simulated world are orchestrated by the World Manager, driving the various Object Managers for that

- the changes in the computations to be operated are orchestrated by the Experiment Manager, driving the various Unit Managers for that

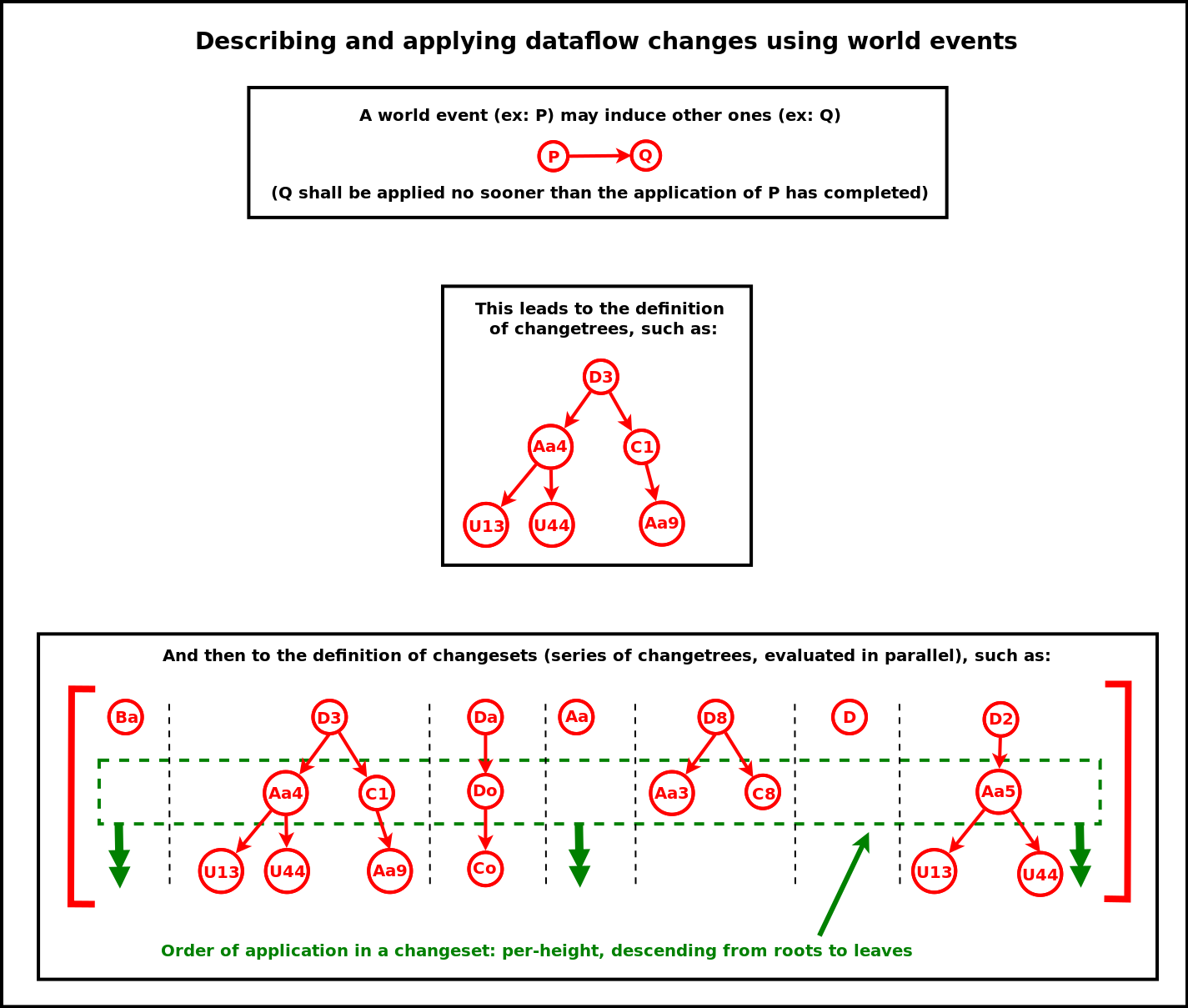

- each change is described by an individual World Synchronisation Event, meant to affect potentially both the state of the simulated world and, in turn, the computations operated on it

- a Changeset may aggregate many of these events, and a dataflow can be turned into another by simply applying a series of changesets

The diagram below gives a synthetic, example view of the overall mode of operation in action:

Let's discuss now more precisely the various elements of the solution, and how they interact.

The World Manager and its Dataflow Object Managers

Purpose of the World Manager

This manager (singleton instance of the WorldManager class), whose shorthand is WM, is used in order to create, update and keep track of the simulated world and its structure; this world is itself made of the target system of interest (e.g. a city) and of its context (e.g. its associated weather system, the other cities in the vicinity, etc.).

As a result, the world manager is generic, yet its use is specific to a given modelling structure (e.g. to some way of describing a city), and bears no direct relationship with the computations that will be performed on it (one of its purposes is indeed to help uncoupling the description of the world of interest from the models operating on it, so that they can themselves be defined independently, one from another).

This virtual world is to be modelled based on various types of dataflow objects. For example, if the target system is a city, then districts, roads, buildings, weather elements, other cities, etc. may be defined (as types first, before planning their instantiaton) in order to represent the whole.

These dataflow objects, which account for the state of the simulation world, are to be defined individually (in terms of internal state; e.g. a building has a surface and an height attributes, of corresponding SUTC metadata) and collectively (in terms of structure and relationships; e.g. a building must be included in a district, and may comprise any number of dwellings).

Purpose of the Object Managers

For each of these types of dataflow objects, an object manager, in charge of taking care of the corresponding dataflow objects (i.e. the instances of that type) must be defined [23]. For example the BuildingManager will take care of (all) Building instances.

Note that a given object manager is to take care of at least one type of objects, possibly multiple ones (e.g. if deemed more appropriate, a single object manager may be defined in order to take care of the buildings and of the households and and the districts).

| [23] | All actual dataflow managers are either instances of the DataflowObjectManager class (for the simpler cases), or child classes thereof (e.g. to support specifically some kind of associations). All these managers are themselves simulation actors, as interacting with them in the course of the simulation may be necessary (e.g. to create new object instances). |

The purpose of an object manager is to be the entry point whenever having to perform dataflow-level operations on the object instances of the type(s) it is in charge of (e.g. actual buildings): in the course of the simulation, instances may indeed have to be properly created, associated, connected, modified, deleted, etc.